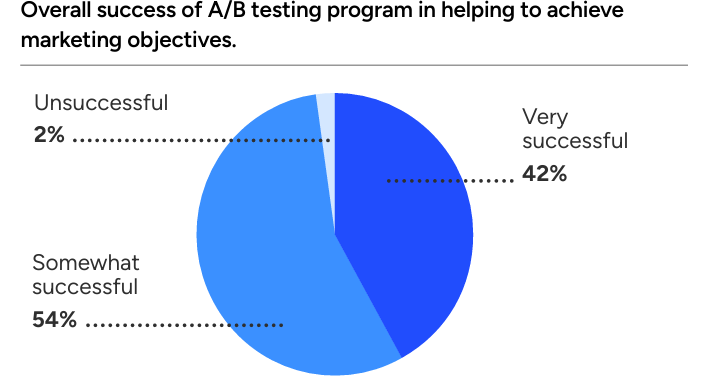

A/B Testing Is a Must, and 98 Percent of SMBs Feel Positive About the Results

In the B2B world, where buying cycles are lengthy, decision-makers hesitate, and leads are scarce, A/B testing is often touted as the growth lever your marketing team needs.

And to be clear: the data backs that up. According to the 2025 report by Unbounce and Ascend2, almost every SMB that runs experiments reports at least some success, with 42 percent calling their program “very successful”.

Another striking data point is that 98 percent of SMBs find A/B testing at least somewhat useful. Those headline numbers are real, but they sit beside a harsher truth: more than half of respondents report a lack of resources to conduct proper tests.

Let’s walk through why B2B brands need A/B testing, what positive impact it delivers, where it breaks down, and how to stop spinning your wheels.

The B2B experiment economy

For B2B marketers, experimentation is both a promise and a problem. The promise is obvious: validated changes to landing pages, email flows, pricing pages, or demo funnels translate into measurable improvements in conversion rates, lead quality, and ultimately revenue.

The problem is practical: B2B funnels are complex, conversions are rare, and teams are thin. So, while most SMBs report that testing yields value, the path from hypothesis to repeatable lift is littered with operational traps.

The data makes that duality plain — confidence in testing coexists with complaints about resources, traffic, and time.

A/B testing is not an optional extra, it’s insurance

B2B buying decisions are multi-stakeholder and often expensive. That makes each conversion more valuable, and each mistake more costly.

A/B testing is less about flashy wins and more about risk mitigation: you validate messaging for niche verticals, confirm which demo CTAs actually trigger sales conversations, and discover incremental improvements that compound across expensive paid channels. When marketers present a CFO with a percentage increase in qualified leads, backed by experimental data, the conversation shifts from faith to fact.

For teams that rely on account-based marketing and personalized outreach, A/B testing supplies the micro-signals that scale those programs efficiently.

The upside of A/B testing

When companies run smart tests with clear hypotheses, adequate traffic (or reliable micro-conversions), and the right tooling, they see consistent, usable wins.

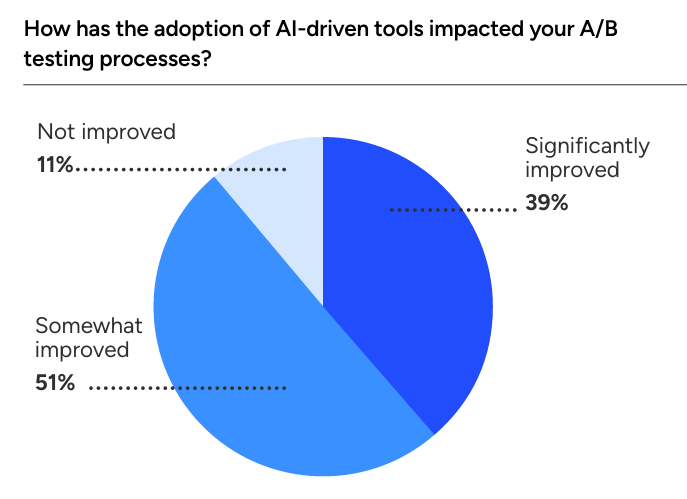

The SMB survey’s 42% “very successful” cohort reflects that reality: these are the teams that prioritize test design, measure business-focused metrics, and fold learnings back into product and sales processes. AI and automation are accelerating that curve for many SMBs by speeding up analysis and surfacing patterns that would otherwise take weeks to manually stitch together.

Those gains are often modest on a per-test basis but meaningful at scale: even small percentage improvements to high-value conversion points compound into real ROI.
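To make the compounding point concrete, here is a minimal sketch of the arithmetic. The lift values are hypothetical placeholders, not figures from the survey, and the calculation assumes each winning test multiplies the conversion rate independently of the others.

```python
def compounded_lift(relative_lifts):
    """Combine a sequence of relative lifts (0.03 means +3%) into one overall lift.

    Assumes each lift multiplies the conversion rate independently,
    which is a simplification of how real funnels behave.
    """
    total = 1.0
    for lift in relative_lifts:
        total *= 1.0 + lift
    return total - 1.0


# Hypothetical example: five winning tests, each worth a 3% relative lift.
overall = compounded_lift([0.03] * 5)
print(f"Overall lift: {overall:.1%}")
```

Under these assumptions, five modest wins add up to more than the simple sum of their parts, which is why a steady cadence of small tests can matter more than one big redesign.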

Yet many brands feel stuck

The same studies that celebrate testing also catalog the blockers.

First, resource scarcity is a real concern: 51% of respondents identify a lack of tools, time, or budget as a core constraint. Without a dedicated owner or at least a focused squad, tests are deprioritized in favor of immediate campaign work.

Second, B2B traffic scarcity destroys statistical power. If your primary goal is a demo booking or an enterprise trial request, reaching confidence can take months — and in that time, seasonality or product changes can invalidate your test.

Third, long set-up and analysis cycles sap momentum; 39% of SMBs say tests take too long to be practical.

Finally, bad hypothesis design and vanity metrics produce noise: teams test flavors of copy or button color without an underlying theory of buyer behavior, and they reward clicks rather than downstream revenue-correlated actions. These factors turn a scientific method into a checkbox exercise.
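The traffic-scarcity problem above is easier to appreciate with numbers. The sketch below uses the standard sample-size approximation for comparing two conversion rates; it is one common way to plan a test, not the method behind the survey. The function names, the 2 percent baseline, the 20 percent relative lift, and the weekly traffic figure are all assumptions chosen for illustration.

```python
import math
from statistics import NormalDist


def sample_size_per_variant(baseline_rate, relative_lift, alpha=0.05, power=0.80):
    """Approximate visitors needed per variant for a two-sided, two-proportion test."""
    p1 = baseline_rate
    p2 = baseline_rate * (1 + relative_lift)
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # critical value for significance
    z_power = NormalDist().inv_cdf(power)          # critical value for power
    p_bar = (p1 + p2) / 2
    numerator = (
        z_alpha * math.sqrt(2 * p_bar * (1 - p_bar))
        + z_power * math.sqrt(p1 * (1 - p1) + p2 * (1 - p2))
    ) ** 2
    return math.ceil(numerator / (p2 - p1) ** 2)


def weeks_to_finish(n_per_variant, weekly_visitors, variants=2):
    """Weeks needed to collect the sample if traffic is split evenly across variants."""
    return math.ceil(n_per_variant * variants / weekly_visitors)


# Hypothetical demo-booking page: 2% baseline, hoping to detect a 20% relative lift.
n = sample_size_per_variant(baseline_rate=0.02, relative_lift=0.20)
print(n, "visitors per variant")
print(weeks_to_finish(n, weekly_visitors=2000), "weeks at 2,000 visitors per week")
```

With these assumed inputs the requirement comes out to roughly 21,000 visitors per variant, which at a few thousand visitors a week means months of runtime. A smaller expected lift or a lower baseline rate pushes the number higher still.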

Why “some success” is not the same as scalable CRO

There’s a conceptual gap between being “somewhat successful” and running a scalable optimization engine.

Many SMBs report incremental wins, but those wins are often isolated and non-transferable. A positive test on a top-of-funnel asset does not necessarily uplift deal close rates downstream. Moreover, self-reported success is subjective: what one team calls “very successful” might be a steady trickle of improvement rather than a transformative leap in conversion.

The practical upshot: a program that rests on ad hoc wins, without tighter methodology, segment discipline, and measurement, will stall before experiments turn into predictable growth.

Fixing the cracks with radical solutions

The path out of mediocrity is operational, not mystical. Reframe your conversion goals to include micro-conversions that correlate with revenue, allowing you to run meaningful tests with less traffic.

Hire or assign a CRO point person who owns hypothesis quality and statistical discipline. Shorten test cycles with modular, hypothesis-driven experiments and use automation or AI to accelerate analysis. Prioritize tests on pages and flows that amplify paid spend or improve sales efficiency.

Finally, pair quantitative tests with qualitative signals — heatmaps, session replays, and micro-surveys — so you don’t misread noise as a signal. These steps won’t make every test a winner, but they will increase your hit rate and allow learning to compound.

Cut to the chase

A/B testing in B2B delivers value for nearly everyone who bothers to do it, but the real challenge is turning that value into a repeatable engine. The headline stat that most SMBs see some success is encouraging; the uncomfortable follow-ups about resources, traffic, and timing explain why too many programs stall.