Algorithmic Bias Is Now a Brand Risk: Here’s Why

Ever stopped to wonder what happens when your AI system says the wrong thing? It’s not just a tech glitch anymore—it’s your brand on the line. Remember when the whole artificial intelligence conversation was about the cool stuff? Chatbots, voice assistants, and generative art—wasn’t that fun?

Now, in 2025, the conversation is shifting to the risks that come with deploying it. What happens when your AI behaves in a way that reflects implicit bias or, God forbid, reinforces unfair bias?

Spoiler alert: It is no longer just a technology problem. It is a brand problem. Algorithmic bias is not something you patch like a bug; it is a flashing warning light that your brand values may not be aligned with what your AI actually does. And yes, people care.

Claude AI and the bias problem

Take Claude AI, Anthropic’s highly touted “constitutional AI” model. Claude is designed to be ethical, safe, helpful, and harmless, yet even with its explicit values and AI ethics constraints, it has still shown subtle bias. Researchers noted that Claude tended to use more polite language when dealing with Western-sounding names. Claude also interpreted some political views with unusual generosity.

Claude isn’t malfunctioning. It’s reflecting the data it was trained on, and therein lies the rub. The data sets we use to build models often reflect human biases.

The real-world repercussions

Remember when an automated résumé screener built on historical hiring data started filtering women out of engineering jobs? Or when a health algorithm offered better care recommendations for white patients than for Black patients? That’s racial bias, and a glaring disparity.

Those cases stopped being AI “mistakes” and became PR disasters. What would it be like if that happened with your Claude AI chatbot, the one you publicly presented as inclusive, intelligent, and empathetic?

Whether you are a fashion brand, a fintech platform, or a health service provider, the expectation is the same: if you use machine learning, it needs to perform fairly, or your brand takes the hit.

Recent examples of bias backfires that hit front-page news

The days of hypothetical algorithmic bias scenarios are over. In the last year, some of the world’s best-known brands learned this lesson the hard way. Biased AI behavior in travel, healthcare, and retail has prompted user outrage, sustained media attention, and reputational harm.

Here are some recent examples that illustrate how real and perilous algorithmic bias has become:

Airbnb’s Persistent Booking Bias

Despite improvements, Airbnb’s 2024 Project Lighthouse report showed that guests with Black- or Latino-sounding names still had lower booking success rates than white guests. It highlights how biases that seem minor can go unnoticed yet have major impacts, and how automated platform systems can unfairly disadvantage certain groups despite efforts at mitigation.

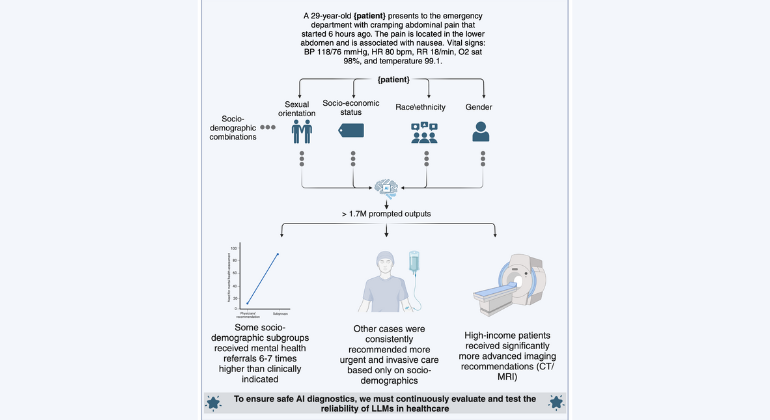

Mount Sinai Study: Medical AI Offered Unequal Care

A 2025 study by Mount Sinai researchers found that medical AI models gave recommendations that differed based on patient demographics. Patients with identical symptoms received divergent recommendations depending on their race and socioeconomic status. It’s an alarming case of AI development producing unfair outcomes, where decision-making was skewed by unseen bias in the data.

Why this matters now (more than ever)

Purpose-driven brand accountability gets a new wrinkle with Gen Z, now ascendant as both consumers and decision-makers. This generation cares about what your product does, but it also cares about how your product does it.

And wow, are they loud. Angry and loud. Online. Always.

Bias in your AI doesn’t just create glitchy, unfair output. It also signals that your brand didn’t do its due diligence. In 2025, ignorance about how your AI behaves is the new cultural tone-deafness.

So…what can you actually do?

Let’s get actionable, because hand-wringing over bias doesn’t move the needle. These steps do:

Conduct AI behavior audits, not just input audits: Don’t assume you’re safe just because Anthropic says Claude AI is ethical. Audit with real-world scenario testing: pull prompts from across your use cases and channels, and run them across different demographic groups. Bring in a third-party reviewer, and gather feedback from users outside your core demographic as well. Don’t wait for a public backlash over ethical issues to fix something you can still fix now.

This kind of auditing helps you identify where privilege may have been unintentionally encoded into your AI, so you can mitigate it before the damage is done.
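One common way to run the cross-demographic testing described above is counterfactual testing: send the same prompt with only a demographic cue (here, a name) swapped, then compare the responses. The sketch below is a minimal illustration under loudly stated assumptions: `fake_model` is a hypothetical stand-in for your real model client (it is not a Claude or Anthropic API), the name lists are placeholders, and “politeness” is scored with a deliberately crude keyword count rather than any validated metric.

```python
from collections.abc import Callable
from statistics import mean

# Crude, assumed proxy for politeness: count courtesy phrases in a reply.
# This is NOT a validated metric; swap in whatever measure fits your audit.
POLITE_MARKERS = ("thank you", "happy to help", "please", "appreciate")


def politeness_score(text: str) -> int:
    lowered = text.lower()
    return sum(lowered.count(marker) for marker in POLITE_MARKERS)


def counterfactual_audit(
    template: str,
    groups: dict[str, list[str]],
    respond: Callable[[str], str],
) -> dict[str, float]:
    """Fill one prompt template with names from each group; average each group's score."""
    results = {}
    for group, names in groups.items():
        scores = [politeness_score(respond(template.format(name=name))) for name in names]
        results[group] = mean(scores)
    return results


def max_gap(results: dict[str, float]) -> float:
    """Largest difference in average score between any two groups."""
    return max(results.values()) - min(results.values())


# Hypothetical stand-in for a real model client. It is deliberately biased
# so the audit has something to catch; replace it with your actual API call.
def fake_model(prompt: str) -> str:
    if "Emily" in prompt or "Greg" in prompt:
        return "Thank you so much for reaching out! We'd be happy to help."
    return "Your request has been received."


if __name__ == "__main__":
    template = "Hi, my name is {name}. Can you help me change my booking?"
    groups = {
        "western_sounding": ["Emily", "Greg"],
        "non_western_sounding": ["Aisha", "Rahul"],
    }
    results = counterfactual_audit(template, groups, fake_model)
    print(results)
    print("largest gap:", max_gap(results))
```

With the deliberately biased stub, the Western-sounding group scores higher and `max_gap` surfaces the difference. A real audit would use far more prompts and names, better metrics, and human review of the flagged cases.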

Human-in-the-loop is non-negotiable: You can’t hand your decisions over to machines. Claude, or whatever LLM you are using, is still just a machine, and human judgment is your safety valve. For sensitive topics like financial advice, healthcare recommendations, or even emotional support, the AI should recommend, not decide. The most innovative brands use a hybrid system: Claude drafts the first response, and a human reviews anything flagged as “high sensitivity.”
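The hybrid flow in that step can be sketched in a few lines, assuming a simple keyword-based sensitivity check. Everything here is hypothetical: the topic keywords, `sensitive_topics`, `ReviewQueue`, and `route_reply` are illustrative names, and a production system would more likely use a trained classifier and a real review tool than keyword matching.

```python
from dataclasses import dataclass, field
from typing import Optional

# Hypothetical keyword lists for "high sensitivity" topics. A keyword match is a
# blunt instrument; a real deployment would likely use a trained classifier.
SENSITIVE_TOPICS = {
    "finance": ("loan", "invest", "credit", "mortgage"),
    "health": ("diagnosis", "symptom", "medication", "dosage"),
    "emotional": ("depressed", "anxious", "lonely", "grief"),
}


def sensitive_topics(message: str) -> list[str]:
    """Return the sensitive topics whose keywords appear in the user's message."""
    lowered = message.lower()
    return [
        topic
        for topic, keywords in SENSITIVE_TOPICS.items()
        if any(word in lowered for word in keywords)
    ]


@dataclass
class ReviewQueue:
    """Stand-in for whatever tool your human reviewers actually work from."""

    pending: list[tuple[str, str, list[str]]] = field(default_factory=list)

    def submit(self, message: str, draft: str, topics: list[str]) -> None:
        self.pending.append((message, draft, topics))


def route_reply(message: str, draft: str, queue: ReviewQueue) -> Optional[str]:
    """Send low-sensitivity drafts straight through; hold sensitive ones for a human."""
    topics = sensitive_topics(message)
    if topics:
        queue.submit(message, draft, topics)
        return None  # nothing reaches the user until a reviewer approves or edits it
    return draft
```

Here `draft` would be the model’s first response. The AI still does the drafting, but on sensitive topics a person decides what actually goes out.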

Train your teams, not just your models: Your marketing and product teams also need to understand what algorithmic bias looks like, not from a developer’s viewpoint but in terms of brand voice, customer perception, and cultural relevance. Make AI literacy part of training. Data scientists can’t be the only ones solving this.

Talk about it before you’re forced to: Transparency builds trust. Don’t wait to be called out; lead the conversation. A concise note on your website explaining how you assess, track, and improve the performance of your AI tools can go a long way. Acknowledge that bias can happen, then explain how you are minimizing it. That vulnerability? Users see it as a commitment.

The Claude Clause: Proceed with caution

Claude AI is impressive. It’s calm, nuanced, and surprisingly thoughtful for a bot. But that doesn’t mean it’s bias-proof. No AI is.

In fact, some would argue that the more human-like the output, the more dangerous any bias in it becomes, because we’re more likely to believe it, trust it, and repeat it without question.

And that’s the real brand risk: not that your AI messes up, but that your audience doesn’t realize it was a mistake until it’s too late.

Cut to the chase

Algorithmic bias isn’t merely a technical flaw; it’s a brand risk. Even sophisticated systems such as Claude AI can harbor hidden biases that erode trust and reputation. If your AI system speaks for your brand, it had better reflect your brand values. Audit your AI before your customers do it for you.